Announcing The GeForce RTX 5060 Desktop Family: DLSS 4 Multi Frame Generation, Neural Rendering & Blackwell Innovations For Every Gamer, Starting At $299; Plus RTX 5060 Laptops Available In May

Even looks affordable.

RTX 5060s Announced.


Yeah, no

On every level, lol. Not affordable. Not available. Nonexistent.

Yeah - 8gb cards shouldn't have even been a thing. Games are getting too beefy.

Should've been 12GB and 16GB models - Nvidia is very cheap with the VRAM. Those are all $300 cards in my opinion.

Aw yeah a brand new beefy card for me to play XI with.

Still can't believe I snagged this last year for this price and now the 5060 alone is this price... yay me

You can't make a 12GB variant of a GPU if the RAM is only available in 8GB configurations. nVidia sources that from outside manufacturers so it's not always up to them.

I'm all for throwing rocks at nVidia but I reserve that for things like shitty driver support, melting cables/connectors and marketing shenanigans. That said, it's not a good look when your entry/low tier GPUs have as much or more VRAM than your mid/high tier GPUs.

I mean, RAM isn't exclusively built as 8GB or nothing. They're 1GB/2GB blocks. You can have 4GB cards, 6GB cards, 8, 10, 12, 14, 16, whatever you want. Just an extra solder block away.

Asura.Eiryl said: » I mean, RAM isn't exclusively built as 8GB or nothing. They're 1GB/2GB blocks. You can have 4GB cards, 6GB cards, 8, 10, 12, 14, 16, whatever you want. Just an extra solder block away.

This isn't true, 5060Ti has a 128-bit memory bus. If you throw 16GB in it (8x2GB modules) you're losing a considerable amount of potential throughput to do so because of shared interfaces. Each module is ideally operating with 32 bits of bus space for full throughput. Mixing clock speeds is an architectural nightmare, so once you go past 8GB it makes no sense not to go all the way to 16GB.

Choices made, didn't have to be made. A 192-bit bus gives you 12GB.

Choices come with cost, wider memory bus isn't free. Nothing stopping them from giving it the 5090 treatment with a 512-bit bus and doing whatever they want with VRAM.
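For anyone following the bus-width arithmetic in the posts above, here is a rough sketch. It assumes one memory module per 32-bit channel (as stated in the thread) and models the doubled-up case, where two modules share a channel, with a hypothetical `clamshell` flag. The function name and parameters are made up for illustration and are not from any vendor tool.

```python
def vram_capacity_gb(bus_width_bits: int, module_gb: int, clamshell: bool = False) -> int:
    """Total VRAM for a given bus width and per-module size.

    Assumes each module ideally gets its own 32-bit slice of the bus.
    clamshell=True puts two modules on each 32-bit channel, which doubles
    capacity without widening the bus.
    """
    channel_bits = 32  # assumed per-module interface width
    if bus_width_bits % channel_bits != 0:
        raise ValueError("bus width must be a multiple of 32 bits")
    channels = bus_width_bits // channel_bits
    modules = channels * (2 if clamshell else 1)
    return modules * module_gb


# Bus widths mentioned in the thread, with 2GB modules.
for bus in (128, 192, 512):
    normal = vram_capacity_gb(bus, 2)
    doubled = vram_capacity_gb(bus, 2, clamshell=True)
    print(f"{bus}-bit: {normal}GB, or {doubled}GB with two modules per channel")
```

Under those assumptions a 128-bit bus lands on 8GB or 16GB and a 192-bit bus on 12GB or 24GB, which is why the in-between sizes need either a different bus width or a different module size.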

Entire GPU market just comes down to balancing what you'll pay for, if they give you too much on the cheaper models they won't get to sell the expensive ones.

That's obviously the game. But it could have been done was all I said. If they could get away with it they'd only sell 5090 supreme oc ti super dom plat +++ for 10 grand.

I don't think there's some inherent need to have 12GB, though. 8GB is sufficient for 1440p, which is the best you're doing without DLSS or framegen on a 5060Ti anyway. If they decide to go 12GB, they're going to be picking between 12 and 24 and 24 would be overkill. Really don't think the 8/16 split is a problem, personally. It seems fine from a design perspective.

Well the point wouldn't be that you need 12 now, if you're buying a 5060 you're likely to hold it until 7060(8060, 9060) and 12gb would possibly be needed sooner than later.

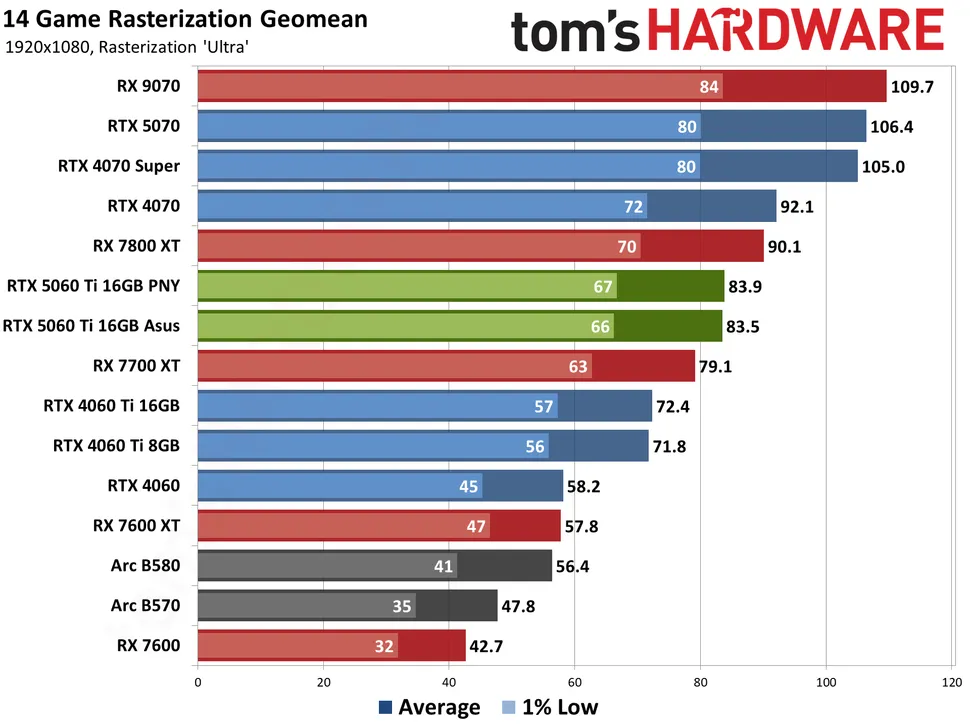

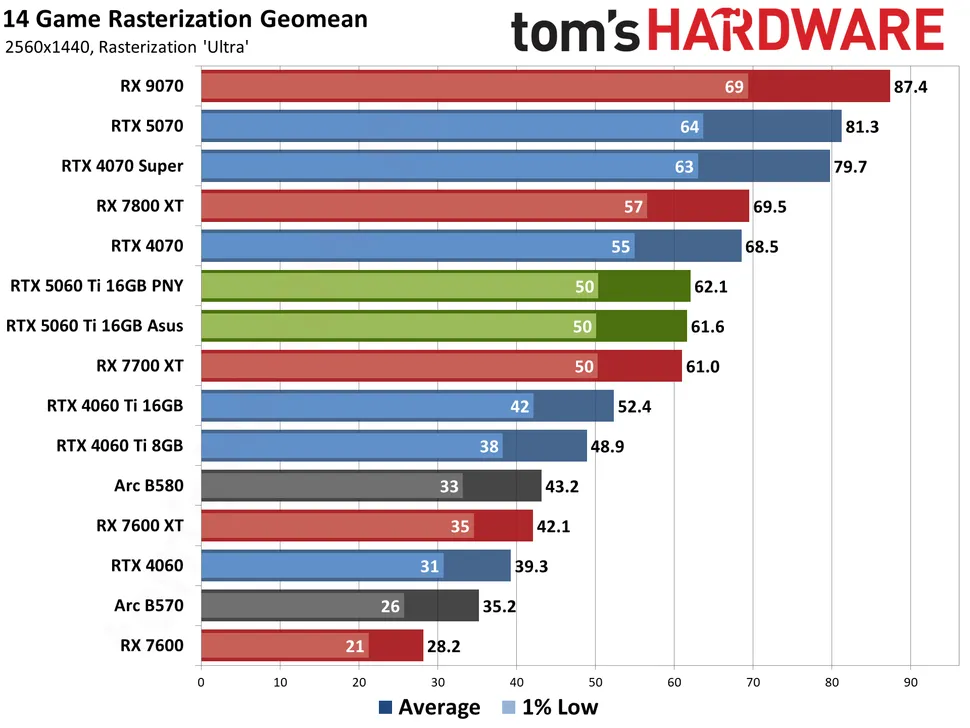

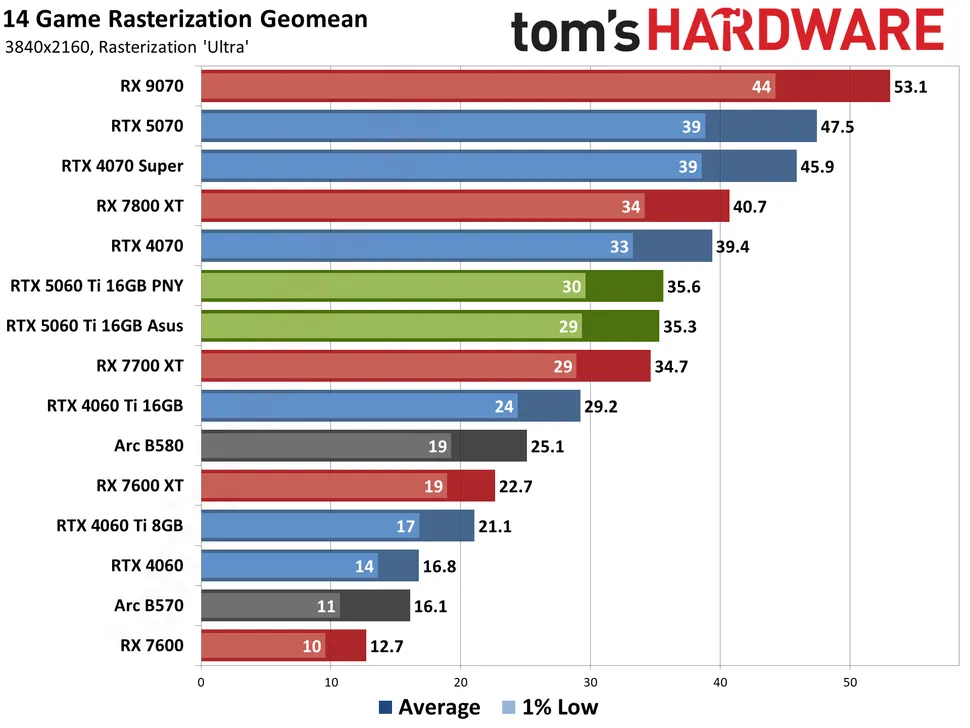

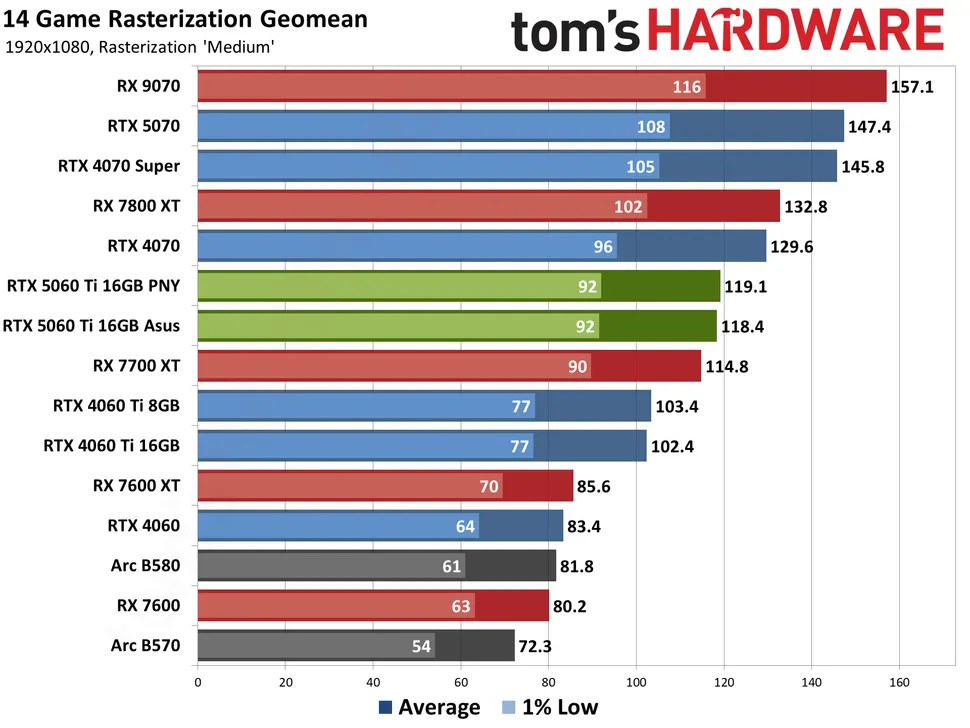

People buying **60 aren't the ones buying every generation. They're the ones going from 1060 to 5060 because their 1060 finally stopped working and B570 are too scary to work with.

Then we're back to, well just buy the 16GB, it's only $50 more. But then just buy the 5070, it's only $50 more than that. Then we've come full circle: why do 8GB models exist? They don't want you to buy them.

Bismarck.Nickeny said: » still can't believe I snagged this last year for this price and now the 5060 alone is this price... yay me

This price is MUCH cheaper than average tbh. Most 4060 laptops that I've found cost $900-$1000.

Bismarck.Nickeny said: » Yeah - 8gb cards shouldn't have even been a thing. Games are getting too beefy. should've been 12gb and 16gb models - Nvidia is very cheap with the vram. Those are all $300 cards in my opinion.

Not true, it depends on resolution and most importantly settings.

https://www.tomshardware.com/pc-components/gpus/nvidia-geforce-rtx-5060-ti-16gb-review

My buddy Jarred included both the 8 and 16GB versions of the 4060Ti for comparison.

1080p "ultra melt my PC", both cards are around the same playable average / lows.

1440p "ultra melt my PC", now we start to see the 16GB card pull ahead slightly, but both are now into the "WTF you doing" range of performance with sub 60fps averages and ugly lows. This is the situation where it would behove the player to change the settings from "ultra" to "high" or "very high" and pull the FPS up a bit.

2160p "ultra melt my PC", now things get really ugly. The 16GB card has a substantial difference but both cards have you in very unplayable sub 30fps.

And for reference 1080p "medium", both cards are 100+ with lows being 70+ so smooth experience.

I asked Jarred to include "high" instead of medium next time if it was possible because people playing with a lower mainstream 8GB card are the ones most likely to be playing at 1080p/1440p and choosing "high" or "medium". Would be nice to see the dividing line since there is a massive difference in texture size between medium, high and ultra/legendary/fusion reactor settings. Jarred has mentioned before that he didn't see differences at 1080p/1440p high for the 8 vs 16GB thing.

Seriously people need to take youtubers with a grain of salt, they review everything at "max settings" to compare relative performance between $2000+ USD cards and $400 USD cards. Expecting a $400~500 USD card to function on the same settings as a $2000+ card is absurd.

Benchmarks. Because people who run B570 on a 9800X3D needed validation. Peak gaming performance!

damn - doesn't even beat last gen 70's - lmao...

K123 said: » If they used 3gb modules they could have done 12gb+18gb configurations. Rumour is they refused to pay the relatively high cost for 3gb modules but will for next gen.

The 5060 is a low tier GPU. It's supposed to provide good to adequate performance based on resolution/settings/features at a low cost. I get that 'higher number better', but this also applies to pricing. The majority of the intended audience for these cards will feel the hit to value more than they will feel the uplift in performance, especially in the current market. If you have a genuine need for 12GB VRAM then you really should be looking at cards that offer that as a baseline and improve from there with the OC/TI/Super versions.

K123 said: » The problem is monopoly and faked scarcity to keep prices high

Unlikely to be faked. According to TSMC earnings calls their 3nm and 5nm chip production is reaching 100% capacity in Q1. They can't lie to shareholders on this matter because it's illegal. 5060 uses 5nm so it's likely that the scarcity is caused by high chip demand from the AI industry. Unless the AI industry slows down its hardware demand (very unlikely) or 5nm-3nm production capacity increases (this takes time), the scarcity is probably real. The chip demand coming from AI industry growth is THAT huge.

K123 said: » The 5060 8GB at $300 would be fine. It'll sell for $600+ and this is the problem.

They're 420* and 480* respectively, if you exclude the premium models with OC, white PCB, RGB, etc. Obviously can't really speak on the performance difference to know if the 16GB is making up that price gap as performance, but that would be impressive. I think these will be much closer than that with few exceptions.

Bismarck.Nickeny said: » damn - doesn't even beat last gen 70's - lmao...

The 3060 was close in performance to the 1080, the 4060 was close in performance to the 2080, so it stands to reason that the 5060 should be similar in performance to the 3080.

K123 said: » If they used 3gb modules they could have done 12gb+18gb configurations. Rumour is they refused to pay the relatively high cost for 3gb modules but will for next gen.

glad I'm stupid and have money for my egg cooker

omelets on nick

Will I be able to run FFXI on this????

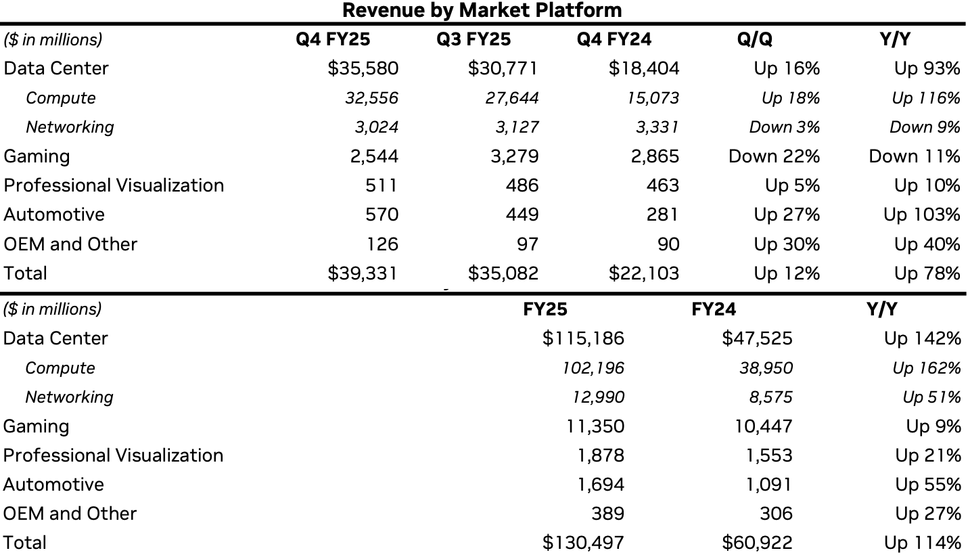

That is the market. Real inflation in the past five years has been brutal. AIB card manufacturers already have super thin margins on these cards, that is why they are throwing in all those RGB/OC/blah blah and bumping up the price $100 or more. This is further complicated by Nvidia no longer being a gaming GPU company.

https://www.tomshardware.com/pc-components/gpus/nvidia-enjoys-usd130b-annual-earnings-despite-gaming-segment-supply-constraints

Let those numbers sink in for a moment. Nvidia could cancel their entire consumer gaming division and it would barely affect them.
